Voice and gesture control in Industry 4.0

To demonstrate the technology, the research team extended a surface inspection system developed by Fraunhofer IOSB. The existing system was supplemented with a speech dialog system from Fraunhofer IAIS for voice control and a microphone array from Fraunhofer IIS for targeted voice capture in noisy environments.

Quality assurance is an important part of today’s production processes. For surface inspection, automatic visual inspection is often complex, time-consuming and costly to implement, and it cannot always cover the entire surface in real time. Corners and angled areas, for example, still cannot be fully inspected automatically. Many companies therefore continue to rely on experienced employees who scan and visually inspect the surfaces and document defective areas for rework and statistical analysis.

High documentation effort in quality assurance

Often, only a few seconds per component are available for quality assurance. Because the documentation effort can be high, depending on the software used, defects are frequently documented inaccurately or incompletely under this time pressure. If defects are documented incompletely, an accumulation of defects cannot be recognized and corrected early, which increases the risk of defects slipping through and of higher rework costs. Inaccurate documentation also makes it harder to locate the defects again during rework and hinders fast, complete repair.

The Multimodal Dialog Assistant enables completely digital defect documentation – and saves time

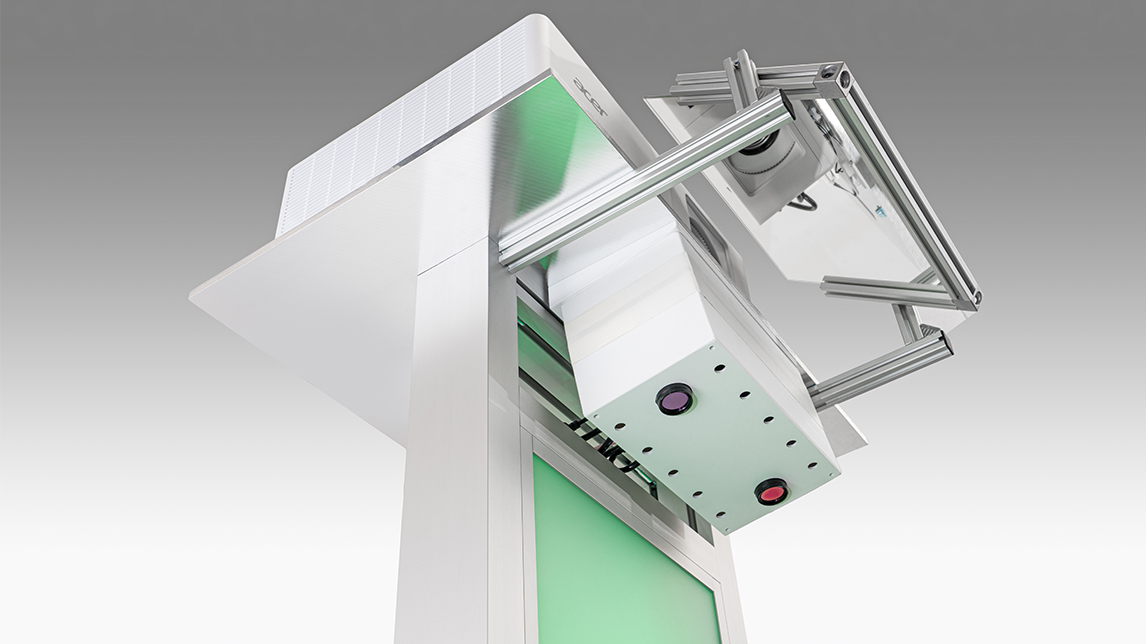

The system developed by Fraunhofer IOSB and modified in cooperation with Fraunhofer IAIS into the Multimodal Dialog Assistant (MuDA) enables completely digital defect documentation in production, from marking to repair, with minimal time effort. Users can choose between pointing gestures and a laser pointer as the input method and mark defect locations on a component quickly, precisely and intuitively. The pointing gesture, and thus the location of the defect, is captured by a camera unit mounted above the component under inspection. A projector immediately displays each documented defect to the worker as a mark projected onto the component. Metadata, such as the type of defect, is entered via a projected menu or the voice dialog system. For touch-up, the defect markings can be precisely reproduced by digital imaging at another station and projected onto the part, so the defects can be located quickly. Completion of the touch-up can also be confirmed directly on the component and recorded in the system with little effort.
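The documentation workflow described above (mark, attach metadata, retrieve for touch-up, confirm repair) can be illustrated with a small data model. The following is a minimal, hypothetical Python sketch, not MuDA's actual implementation: the names `DefectRecord`, `DefectLog` and `Status`, and the assumption that positions are stored in millimeters in the component's own coordinate frame, are illustrative only. It shows how a marked defect carries its location and type, how a touch-up station could query the open defects of a component to project them, and how complete records support statistics on recurring defect types.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    OPEN = "open"          # marked at inspection, awaiting touch-up
    REPAIRED = "repaired"  # touch-up confirmed on the component


@dataclass
class DefectRecord:
    component_id: str
    x_mm: float        # assumed: position in the component's own coordinate frame
    y_mm: float
    defect_type: str   # metadata, e.g. entered via voice dialog or projected menu
    status: Status = Status.OPEN


class DefectLog:
    """Hypothetical in-memory defect log; a real system would persist records."""

    def __init__(self) -> None:
        self._records: list[DefectRecord] = []

    def mark(self, component_id: str, x_mm: float, y_mm: float,
             defect_type: str) -> DefectRecord:
        # Called when a pointing gesture or laser mark has been localized.
        record = DefectRecord(component_id, x_mm, y_mm, defect_type)
        self._records.append(record)
        return record

    def open_defects(self, component_id: str) -> list[DefectRecord]:
        # The defects a touch-up station would project back onto the part.
        return [r for r in self._records
                if r.component_id == component_id and r.status is Status.OPEN]

    def confirm_repair(self, record: DefectRecord) -> None:
        # Touch-up completion confirmed directly on the component.
        record.status = Status.REPAIRED

    def counts_by_type(self) -> Counter:
        # Complete records make an accumulation of one defect type visible early.
        return Counter(r.defect_type for r in self._records)


# Usage example
log = DefectLog()
scratch = log.mark("part-0815", 120.0, 45.5, "scratch")
log.mark("part-0815", 300.0, 12.0, "dent")
log.confirm_repair(scratch)
print([r.defect_type for r in log.open_defects("part-0815")])  # ['dent']
```

Keeping the repair status on the record itself, rather than deleting repaired defects, preserves the full history needed for the statistical evaluation mentioned earlier.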

Video: Watch the Multimodal Dialog Assistant in action

Speech recognition and gesture control in one assistant: Gerrit Holzbach shows how to use the Multimodal Dialog Assistant for visual quality control.

Research Center Machine Learning